Full disclosure: I’m a Peyton Manning fan. If you can’t get past that, stop reading now. Still there? Good, welcome.

Following the Broncos' recent loss to the Ravens (and the subsequent Patriots loss), there has been a new wave of the old Manning vs. Brady argument. Clutch vs. choke. Winner vs. can’t-win-the-big-one. Add in another playoff loss for Matt Ryan and a couple of big wins for Joe Flacco, and the debate is raging like never before.

If you’re reading this, you’ve probably at least touched on the subject this January. I have. The debate always seems to deteriorate into emotional arguments filled with snarky retorts and anecdotal “evidence”. The Tuck Rule game is countered with the Helmet Catch. The Flacco Prayer is answered with the Tracy Porter pick six. And on and on. And on. Every quarterback has been lucky, and every quarterback has been unlucky. Anyone can bring up some argument to support their claim. Without looking at the entire picture, we’ll never reach a valid conclusion. There has to be a better way.

A Clean Slate

As we gear up for another NFL season kicking off in just over a week, there will be lots of discussion of Super Bowl contenders and playoff predictions, and of which teams will improve and which will decline. One of the big and often overlooked factors in these exercises is a team’s strength of schedule.

I love the spirit of the blind resume. I hate the execution.

Earlier today on CBSsports.com, Matt Norlander wrote an article

The Philadelphia Eagles finished the season 8-8, but outscored their opponents by 68 points, the 5th-best mark in the NFC. Seven of their 8 losses were by one score or less, and they finished the season hot, riding a 4-game winning streak. Most rankings that try to determine how strong a team truly is had the Eagles as high as the

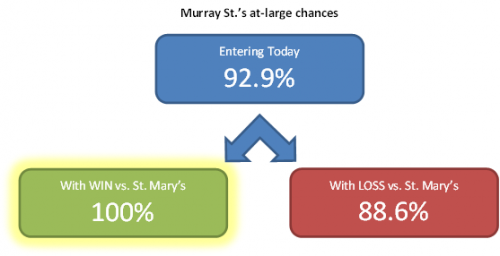

Around this time of year, there’s lots of talk about who’s in and who’s out and who’s on the bubble. Plenty of chatter about what may or may not get your team into the Big Dance. Tons of discussion of big wins and bad losses.

Around this time of year, there’s lots of talk about who’s in and who’s out and who’s on the bubble. Plenty of chatter about what may or may not get your team into the Big Dance. Tons of discussion of big wins and bad losses.